Projects

Alongside our core product, we develop and support a range of projects that help fact checkers and journalists work more efficiently and effectively.

Prebunking at Scale

This collaborative project uses AI to identify misleading narratives across Europe before they go viral. By analysing short-form video across multiple platforms and languages, the system detects early signals and helps fact checkers act faster.

Developed in partnership with the European Fact-Checking Standards Network (EFCSN) and Maldita.es, the project brings together leading expertise from across the continent to build shared tools that strengthen the wider fact-checking ecosystem.

Designed as shared infrastructure for fact checkers, Prebunking at Scale (PAS) combines European expertise, advanced AI models and a consistent methodology to improve monitoring, narrative insight and cross-border collaboration. In 2026, the tool will expand to support more than 25 European languages.

MediaVault

MediaVault is a shared, searchable library of fact checks that brings verified work from fact-checking organisations around the world into a single structured resource.

Originally developed by the Duke Reporters' Lab in the United States, the system aggregates claim text, verdicts, sources, timestamps and topic information through feeds such as ClaimReview, making it easier to locate, compare and build on existing fact-checking efforts.
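To illustrate the kind of record such a feed carries, here is a minimal sketch that reads one ClaimReview item (the schema.org JSON-LD type) and extracts the claim text, verdict, publisher, link and date. It is not MediaVault's code or schema: the `FactCheck` class, the `parse_claim_review` function and all sample values are hypothetical, and real feeds may omit or nest fields differently.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Illustrative ClaimReview record (schema.org JSON-LD). All values are invented.
SAMPLE = """
{
  "@context": "https://schema.org",
  "@type": "ClaimReview",
  "url": "https://example.org/fact-checks/123",
  "datePublished": "2024-05-01",
  "claimReviewed": "Example claim text.",
  "author": {"@type": "Organization", "name": "Example Fact Checker"},
  "reviewRating": {"@type": "Rating", "alternateName": "False"},
  "itemReviewed": {
    "@type": "Claim",
    "author": {"@type": "Person", "name": "Example Speaker"},
    "datePublished": "2024-04-28"
  }
}
"""


@dataclass
class FactCheck:
    """Hypothetical flattened view of one fact check."""
    claim: str
    verdict: Optional[str]
    publisher: Optional[str]
    url: Optional[str]
    published: Optional[str]


def parse_claim_review(raw: str) -> FactCheck:
    """Parse a single ClaimReview JSON-LD string into a FactCheck."""
    data = json.loads(raw)
    if data.get("@type") != "ClaimReview":
        raise ValueError("not a ClaimReview record")
    rating = data.get("reviewRating") or {}
    author = data.get("author") or {}
    return FactCheck(
        claim=data["claimReviewed"],           # the text of the claim checked
        verdict=rating.get("alternateName"),   # textual rating, e.g. "False"
        publisher=author.get("name"),          # organisation that did the check
        url=data.get("url"),                   # link to the published fact check
        published=data.get("datePublished"),   # date of the fact check
    )


record = parse_claim_review(SAMPLE)
print(record.claim, "->", record.verdict)
```

Flattening each record into the same small structure is one way to make fact checks from different publishers directly comparable and searchable.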

Now hosted and maintained by Full Fact, MediaVault provides secure, reliable infrastructure that supports large-scale monitoring and analysis across languages and platforms.

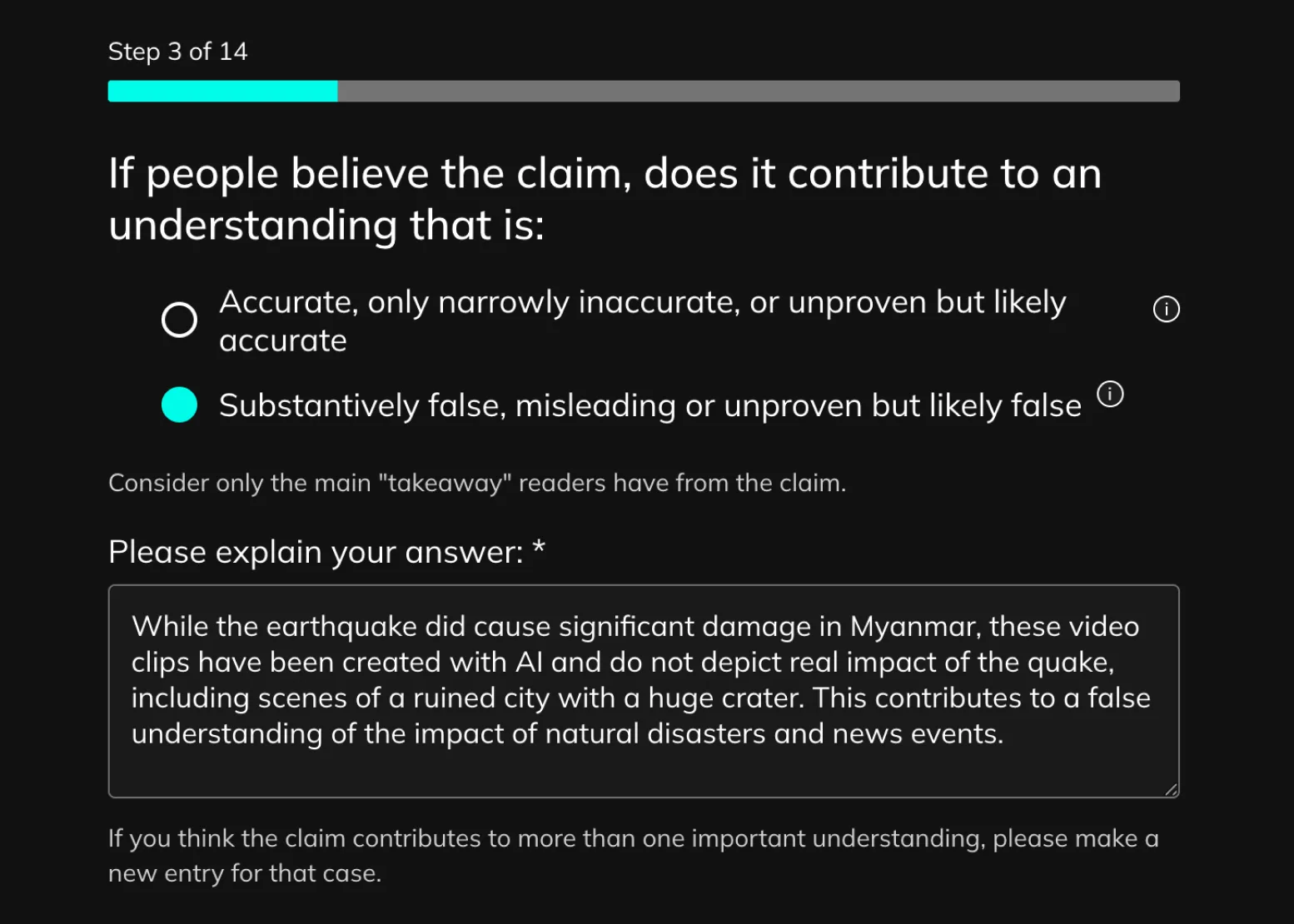

AISI Harms Assessment Tool

Built in collaboration with AISI and Peter Cunliffe-Jones, the Harms Assessment Tool is a structured questionnaire that helps fact checkers record clear, consistent evidence about the claims they assess and the real-world consequences and harms those claims may cause.

Informed by academic research on misinformation effects and extensive trials with fact checkers, the tool guides users through an adaptive set of questions. Based on their responses, it provides streamlined options, embedded criteria, examples, and AI support to reduce confusion and improve accuracy.

Quality is overseen through a structured human review process to ensure findings remain consistent, transparent, and aligned with the best available research. The project provides a reliable foundation for producing high-quality, verifiable data to support analysis and further study of misinformation and its effects.

Polygraph

At Full Fact, we believe that people need good information to help them make good decisions. But we can only help if we know where people are seeing bad information in the first place.

Over recent years, the information landscape has become ever more fragmented. There's been a steady drift away from a small number of widely-shared sources of news, like national newspapers and TV news broadcasts, towards personalised social media feeds and millions of channels on YouTube and TikTok.

And now people are increasingly getting their information from AI, such as Google's AI Mode and the AI summaries that appear at the top of many search results, as well as through direct conversations with ChatGPT and other chatbots.

Of course, we have no access to the details of individual people's queries and conversations, nor would we want it.

But as these tools become ubiquitous, it becomes important to track how consistently they provide trustworthy information on everyday subjects.

To track how different chatbots and assorted AI summary tools are responding, we created an internal tool called "Polygraph".

Arab Fact-Checking Network (AFCN) Collaboration

Full Fact AI worked in partnership with the Arab Fact-Checking Network (AFCN) to make advanced fact-checking tools available in Arabic. The collaboration supported fact checkers across the Middle East and North Africa with technology, training and linguistic expertise that helped them identify harmful claims more quickly and accurately.

The tools analysed Arabic-language content from news and social platforms, offering features such as real-time monitoring, advanced search and alerts when false claims resurfaced. Extensive Arabic data annotation and model evaluation ensured the system reflected the linguistic and structural complexity of the language, enabling more reliable results in local contexts.

Through this partnership, more than 20 fact-checking organisations across nine Arab countries gained access to AI tools that strengthened their ability to verify information and respond to fast-moving misinformation.